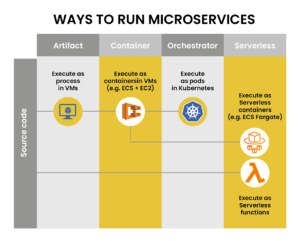

Microservices are a highly scalable way to develop software, but the benefits only materialize if we choose the right way to deploy them. A microservices architecture offers many deployment options, and it is hard to know which one fits best. Let’s look at the main options for deploying microservices and examine each in enough detail to know when it is the right choice.

1. Deploying Microservices With Virtual Machines

When an application exceeds the capacity of a server, we can scale up (upgrade the server) or scale out (add more servers). With microservices, scaling horizontally across two or more machines makes more sense, because we get better availability as a bonus. And once we have a distributed setup, we can still scale up by upgrading the servers. But horizontal scaling is not without its problems. Going beyond a single machine raises a few key questions that make operations and troubleshooting more complex.

How do we correlate log files distributed among many servers? How do we collect sensible metrics, or handle upgrades and downtime? How do we handle spikes and drops in traffic? These problems are inherent to distributed computing, and we begin to experience them as soon as we add more machines.
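One common answer to the log question is to tag every request with a correlation ID and merge the per-server logs into a single timeline. The sketch below illustrates the idea under stated assumptions: the log line format (`<ISO timestamp> <server> <request_id> <message>`), the server names, and the helpers `parse_line`, `merge_logs`, and `trace_request` are all hypothetical and not taken from any particular logging product.

```python
import heapq
from datetime import datetime
from typing import Iterable, Iterator, List, NamedTuple


class LogEntry(NamedTuple):
    timestamp: datetime
    server: str
    request_id: str
    message: str


def parse_line(line: str) -> LogEntry:
    """Parse one line in the assumed '<timestamp> <server> <request_id> <message>' format."""
    ts, server, request_id, message = line.split(" ", 3)
    return LogEntry(datetime.fromisoformat(ts), server, request_id, message)


def merge_logs(*streams: Iterable[str]) -> Iterator[LogEntry]:
    """Merge per-server logs, each already sorted by time, into one timeline."""
    parsed = [map(parse_line, stream) for stream in streams]
    return heapq.merge(*parsed, key=lambda entry: entry.timestamp)


def trace_request(request_id: str, *streams: Iterable[str]) -> List[LogEntry]:
    """Return every entry for one request, across all servers, in time order."""
    return [e for e in merge_logs(*streams) if e.request_id == request_id]


# Hypothetical sample logs from two servers.
server_a = [
    "2024-01-01T10:00:00 server-a req-1 received order",
    "2024-01-01T10:00:02 server-a req-2 received order",
]
server_b = [
    "2024-01-01T10:00:01 server-b req-1 charged payment",
]

for entry in trace_request("req-1", server_a, server_b):
    print(entry.timestamp.time(), entry.server, entry.message)
```

In practice this job is usually delegated to a centralized logging system, but the principle is the same: a shared identifier plus a common clock lets you follow one request across machines.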

Benefits Of Deploying Microservices With VM

- An important advantage of VMs is that each service instance runs in complete isolation. It has a fixed amount of CPU and memory and cannot steal resources from other services.

- Deploying microservices as VMs lets you leverage mature cloud infrastructure. Clouds such as AWS provide valuable features, including load balancing and autoscaling.

- Another advantage of deploying a service as a VM is that it encapsulates the implementation technology of the service. Once the service is packaged as a VM, it becomes a black box. The Management API of the VM becomes the API for deploying the service. Deployment becomes simpler and more reliable.

Drawbacks Of Deploying Microservices With VM

- One drawback is less efficient resource utilization. Each service instance carries the overhead of an entire VM, including the operating system. Furthermore, in a typical public IaaS, VMs come in fixed sizes, so a VM may well be underutilized.

- In addition, a public IaaS usually charges for VMs regardless of whether they are busy or idle. An IaaS such as AWS offers autoscaling, but it is difficult to react quickly to changes in demand. As a result, you often have to overprovision VMs, which increases the cost of deployment.

- Another downside of this approach is that deploying a new version of a service is usually slow. VM images are slow to build due to their size, and VMs are slow to instantiate for the same reason. It also takes some time for an operating system to start up. Note, however, that this is not universally true, as there exist lightweight VMs such as those built by Boxfuse.

- Another shortcoming of the Virtual Machine Per Service Instance pattern is that you (or someone else in your organization) are usually responsible for a lot of undifferentiated heavy lifting. Unless you use a tool that handles the overhead of building and managing the VMs, that work is your responsibility. It is necessary but time-consuming, and it distracts you from your core business.

2. Deploying Microservices With Containers

Containers mitigate the shortcomings of deploying microservices with VMs. Because a container packages the application together with all the dependencies it needs to run, it becomes a self-contained unit that runs on any server without installing anything except the container runtime. Containerizing (for example, dockerizing) an application offers enough virtualization to run software in isolation. There are two ways to run containers: directly on servers or through a managed service.

Containers running directly on servers replace plain processes and provide greater flexibility and control. They also lay the foundation for distributing the load across machines. Managed services, or serverless containers, such as AWS Fargate let software companies run containerized applications without thinking about servers. You build a container image and point the cloud provider to it, and the provider takes care of the rest: provisioning virtual machines, patching and upgrading them, and downloading and monitoring images. Managed services come with built-in load balancers, which makes things easier and more efficient.

Benefits Of Deploying Microservices With Containers

- No Server: There is no need to maintain or patch the server.

- Simplified Deployment: All you need to do is build a container image and tell the service to use it.

- Autoscaling: A managed service adds capacity when traffic spikes and removes it when traffic drops.

- Isolation: Each container is isolated from the others and from the host OS, with its own private file system. This eliminates dependency conflicts, as long as you are not abusing volumes.

- Concurrency: You can run multiple instances with a single container image without conflicts.

- Less overhead: Containers are more lightweight than VMs because they do not need to boot an entire OS.

- No-install deployments: There is no installation step. You download a container image and run it.

- Resource control: You define CPU and memory limits on each container, so a single container cannot destabilize the server.
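The concurrency and resource-control points above can be sketched for containers run directly on a server with Docker. The example only builds the command lines and does not execute them. The image name `example/orders-service:1.0`, the container names, and the helper `docker_run_command` are hypothetical; `--cpus` and `--memory` are the standard `docker run` options for capping CPU and memory.

```python
import shlex
from typing import List


def docker_run_command(image: str, name: str, cpus: float, memory: str) -> List[str]:
    """Build a `docker run` command that caps the container's CPU and memory."""
    return [
        "docker", "run", "--detach",
        "--name", name,
        f"--cpus={cpus}",      # maximum CPU share, e.g. 0.5 of one core
        f"--memory={memory}",  # hard memory limit, e.g. 256m
        image,
    ]


# Two instances started from the same (hypothetical) image, each with its own limits.
commands = [
    docker_run_command("example/orders-service:1.0", f"orders-{i}", cpus=0.5, memory="256m")
    for i in range(2)
]

for cmd in commands:
    print(shlex.join(cmd))
```

To actually start the containers you could pass each list to `subprocess.run`, assuming Docker is installed on the machine.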

Drawbacks Of Deploying Microservices With Containers

- Vendor lock-in: With managed services, some degree of vendor lock-in is hard to avoid, which makes moving to another provider challenging.

- Limited resources: You can’t avoid imposed CPU and memory limits with managed services.

- Less control: With managed services, the cloud vendor provides and controls most of the infrastructure, so you don’t have the same level of control you would get with other options.

Despite these drawbacks, any container option is suitable for small to medium-sized microservices applications. If you are comfortable with your vendor, a managed container service is easier to run, as it takes care of many of the details for you. For large-scale deployments, the equation changes. Beyond a certain size, managing the growing number of microservices becomes burdensome, and your team will likely ask for an orchestrated solution to maintain productivity and application performance.

3. Deploying Microservices With Orchestrators

Orchestrators are platforms that distribute container workloads over a group of servers. The best-known orchestrator is Kubernetes, a Google-created open-source project maintained by the Cloud Native Computing Foundation. It is widely adopted for container management, and its deployment strategies are highly scalable and help meet the diverse deployment requirements of an enterprise.

Benefits Of Deploying Microservices With Orchestrators

- An orchestrator such as Kubernetes provides the features you need to run a microservices application, including routing, load balancing, security, and centralized logging.

- With Kubernetes, you write a manifest that describes the desired state and let the cluster take care of the rest.

- Kubernetes is supported by all major cloud providers and is widely regarded as the de facto platform for deploying microservices at scale.
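To make the manifest idea concrete, here is a minimal sketch that builds a Kubernetes `Deployment` manifest as a Python dictionary and prints it as JSON. The service name, image, replica count, port, and resource limits are hypothetical values chosen for illustration, and `deployment_manifest` is a helper invented for this example; the field names follow the `apps/v1` Deployment schema.

```python
import json
from typing import Any, Dict


def deployment_manifest(name: str, image: str, replicas: int, port: int) -> Dict[str, Any]:
    """Describe the desired state: N replicas of one container image."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                            # Illustrative limits, not a recommendation.
                            "resources": {"limits": {"cpu": "500m", "memory": "256Mi"}},
                        }
                    ]
                },
            },
        },
    }


manifest = deployment_manifest("orders", "example/orders-service:1.0", replicas=3, port=8080)
print(json.dumps(manifest, indent=2))
```

Kubernetes accepts manifests in JSON as well as YAML, so output like this could be saved to a file and applied with `kubectl apply -f`. The cluster then works to keep three replicas running, which is the "let the cluster take care of the rest" part.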

Drawbacks Of Deploying Microservices With Kubernetes

- Complexity: Kubernetes is a complex environment with a steep learning curve, and it takes time to realize its full potential. That complexity is inescapable, so it is important to be familiar with the environment; for smaller applications it can be overkill.

- Administrative Burden: Running a Kubernetes installation requires significant expertise. Although the top cloud providers offer managed clusters that take away much of the administration work, it still takes in-depth understanding to define and operate a complex Kubernetes infrastructure. Security, logging, redundancy, and scaling are all part of the Kubernetes fabric, and configuring them adds to the administrative burden on the team.

- Skill set: Kubernetes development requires a special skill set. It can take weeks to understand all the moving parts and learn how to troubleshoot a failed deployment. Transitioning to Kubernetes can be slow and reduce productivity until the team becomes familiar with the tool.

Kubernetes is the most popular choice among companies that deploy applications in containers at scale. For them, choosing an orchestration platform may be the only way forward. However, before making the jump, make sure you have engineers with the specialized skills your project requires.

4. Deploy Microservices As Serverless Functions

Serverless functions are yet another way to deploy microservices. Instead of managing processes, containers, or servers, you use the cloud to run code on demand. For example, AWS Lambda provides all the infrastructure required to run scalable and highly available services, freeing the team to focus on writing quality code.
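As a rough illustration, a microservice endpoint deployed as a function can be as small as the sketch below. It uses the `(event, context)` handler signature that AWS Lambda expects from Python functions and assumes the function is invoked through an HTTP proxy integration, so the event carries `queryStringParameters` and the response carries `statusCode` and `body`. The `order_id` parameter and the hard-coded order status are hypothetical.

```python
import json
from typing import Any, Dict


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Handle one request; the platform decides when and where this code runs."""
    # queryStringParameters may be missing or None when no query string is sent.
    params = event.get("queryStringParameters") or {}
    order_id = params.get("order_id")
    if order_id is None:
        return {"statusCode": 400, "body": json.dumps({"error": "order_id is required"})}

    # A real service would look the order up in a data store here.
    return {"statusCode": 200, "body": json.dumps({"order_id": order_id, "status": "shipped"})}


# Local invocation for a quick check; in the cloud, the platform calls the handler.
print(lambda_handler({"queryStringParameters": {"order_id": "42"}}, None))
```

Because the handler is a plain function, it can be exercised locally like this before it is ever deployed.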

Benefits Of Deploying Microservices As Serverless Functions

- Convenient pricing: With a serverless deployment, you pay only for what you use. If there is no demand, there is no charge.

- Ease of use: You can deploy functions on the fly without compiling or building container images, which is great for trying things out and prototyping.

- Easier to scale: You get (basically) infinite scalability. The cloud will provide enough resources to meet the demand.

Drawbacks Of Deploying Microservices As Serverless Functions

- Vendor lock-in: Serverless functions are built on a specific cloud provider’s platform, so choosing them ties you to that vendor’s implementation. This makes it difficult to move away quickly in the future.

- Cold starts: Cloud providers spin down the resources attached to unused functions. As a result, infrequently used functions might take a long time to start when they are next invoked.

- Limited resources: As a managed service, serverless functions are given limited memory and execution time. They can’t be long-running processes, so you must keep this constraint in mind while designing microservices applications.

- Limited runtimes: Only a limited set of languages and frameworks is supported. Although cloud providers are expanding their offerings, you may be forced to use a language you are not comfortable with in order to go serverless.

- Unpredictable bills: You may get a nasty surprise with serverless functions. Because this is a usage-based cloud service, it is sometimes hard to predict the size of the invoice at the end of the month.
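The pricing benefit and the billing risk are two sides of the same usage-based formula. The sketch below assumes a simple model in which a function is billed per request plus per GB-second of compute. The two rates are hypothetical placeholders rather than any provider's actual prices, and `monthly_cost` is a helper invented for this example.

```python
def monthly_cost(
    invocations: int,
    avg_duration_s: float,
    memory_gb: float,
    price_per_million_requests: float,
    price_per_gb_second: float,
) -> float:
    """Estimate a monthly bill under a per-request plus per-GB-second model."""
    request_cost = invocations / 1_000_000 * price_per_million_requests
    compute_cost = invocations * avg_duration_s * memory_gb * price_per_gb_second
    return request_cost + compute_cost


# Hypothetical rates, for illustration only.
RATE_PER_MILLION_REQUESTS = 0.25
RATE_PER_GB_SECOND = 0.00002

idle = monthly_cost(0, 0.2, 0.5, RATE_PER_MILLION_REQUESTS, RATE_PER_GB_SECOND)
quiet = monthly_cost(1_000_000, 0.2, 0.5, RATE_PER_MILLION_REQUESTS, RATE_PER_GB_SECOND)
busy = monthly_cost(50_000_000, 0.2, 0.5, RATE_PER_MILLION_REQUESTS, RATE_PER_GB_SECOND)

print(f"idle month:  {idle:.2f}")
print(f"quiet month: {quiet:.2f}")
print(f"busy month:  {busy:.2f}")
```

Under this model the bill scales linearly with traffic: no demand means no charge, and a month with fifty times the traffic costs fifty times as much, which is why an unexpected spike shows up directly on the invoice.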

Conclusion

Deploying microservices applications is challenging. There may be tens or hundreds of services written in different languages and frameworks. Each is a mini-application with its own deployment, resource, scaling, and monitoring requirements. Therefore, a team, or the cloud consulting company advising it, must evaluate the pros and cons of each deployment option against the specific use case to determine which method best suits the application architecture.