The software development industry has been revolutionized by application containers, which give developers a flexible and scalable way to develop, deploy, and manage microservices. As containerization has gained popularity, application containers have been adopted across many industries for a wide range of use cases. Let's look at some figures on the size of the application containerization market and the growth it is expected to see in the near future.

- The application container market is expected to grow at a Compound Annual Growth Rate (CAGR) of 32% over the forecast period from 2020 to 2028.

- According to Gartner, by 2023 more than 70% of organizations will be running more than two containerized applications.

- Starting from a relatively small base, global revenue from container management is predicted to rise from $465.8 million in 2020 to $944 million in 2024.
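The two forecasts above measure different things (overall market size versus container management revenue), so they are not directly comparable, but the arithmetic behind a CAGR is easy to check. This short Python sketch uses only the figures quoted above and the standard CAGR formula, (end / start) ** (1 / years) - 1, to compute the annual growth rate implied by the revenue forecast and how much a market grows over eight years at 32% a year.

```python
"""Check the growth arithmetic behind the market figures quoted above."""


def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


def growth_multiple(rate: float, years: int) -> float:
    """Factor by which a quantity grows when compounded at `rate` for `years`."""
    return (1 + rate) ** years


# Container management revenue: $465.8M in 2020 -> $944M in 2024 (four years).
revenue_cagr = cagr(465.8, 944.0, 2024 - 2020)

# Application container market: 32% CAGR over the 2020-2028 forecast period.
market_multiple = growth_multiple(0.32, 2028 - 2020)

if __name__ == "__main__":
    print(f"Implied container management revenue CAGR: {revenue_cagr:.1%}")
    print(f"Market growth multiple at 32% CAGR over 8 years: {market_multiple:.1f}x")
```

Running it gives an implied revenue CAGR of about 19.3% and a growth multiple of about 9.2x over eight years at 32% a year.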

These statistics indicate that the containerization trend is not only gaining momentum but is also set to continue its rapid expansion in the years to come.

What Is Containerization?

Containerization of an application means packaging the application and its dependencies into a container, which is a lightweight, stand-alone executable package that includes everything the application needs to run. This includes the code, runtime, system tools, libraries, and settings. When an application is containerized, it can be run consistently across different environments, as all of its dependencies are included in the container. This makes it easier to develop, test, and deploy the application, as well as to scale and manage it in production.

Once an application is containerized, it can be deployed to on-premises, cloud, or hybrid hosting environments without compatibility issues. Containerization also uses resources efficiently: multiple containers can run on a single host and share resources such as the host's kernel. This makes it more cost-effective than traditional virtualization, where each virtual machine runs its own operating system and has its own allocated resources. Finally, containerized applications are easier to scale and manage, because containers can be quickly added or removed to match changing resource requirements.

Importance Of Container-Based Virtualization

Container-based virtualization is important because it allows for efficient and consistent deployment of applications and services. Containers package all the necessary dependencies, libraries, and configurations for an application to run, ensuring that it will run the same way regardless of the environment it is deployed in. This makes it easier to develop, test, and deploy applications, as well as to scale and manage them in production. Additionally, containers use fewer resources than traditional virtual machines, making them more cost-effective and efficient.

How Container-Based Virtualization Works

Container-based virtualization works by using a container engine, such as Docker, to create and manage containers. Each container is a lightweight, stand-alone executable package that includes everything an application or service needs to run, including code, runtime, system tools, libraries, and settings.

Containers are created from images: read-only templates that bundle the application's files, organized as a stack of filesystem layers, together with configuration such as the default command and environment variables. An image can be built from scratch or on top of an existing base image, and it can be shared and reused across different environments.
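To make the idea of layered, reusable images more concrete, here is a toy Python model. It assumes, as in OCI-style images, that each layer is identified by the SHA-256 digest of its content, so two images built on the same base reference the same layers instead of copying them. The `Image` class, the `build` function, and the placeholder layer contents are hypothetical illustrations, not a container engine's API; real layers are (usually compressed) tar archives of filesystem changes.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Optional


def digest(content: bytes) -> str:
    """Identify a layer by the SHA-256 hash of its content."""
    return "sha256:" + hashlib.sha256(content).hexdigest()


@dataclass
class Image:
    """Hypothetical toy image: an ordered stack of layer digests plus configuration."""
    layers: tuple
    config: dict = field(default_factory=dict)


def build(base: Optional[Image], new_layers: list, config: dict) -> Image:
    """Build a child image that reuses every base layer and appends new ones."""
    inherited = base.layers if base is not None else ()
    added = tuple(digest(content) for content in new_layers)
    return Image(layers=inherited + added, config=config)


def shared_layers(a: Image, b: Image) -> set:
    """Layers both images reference; a registry or host needs to store these only once."""
    return set(a.layers) & set(b.layers)


# Placeholder byte strings standing in for real filesystem layers.
base = build(None, [b"os userland files", b"language runtime"], {})
app_a = build(base, [b"service A code"], {"cmd": ["python", "a.py"]})
app_b = build(base, [b"service B code"], {"cmd": ["python", "b.py"]})

if __name__ == "__main__":
    print("layers in app_a:", len(app_a.layers))
    print("layers shared by app_a and app_b:", len(shared_layers(app_a, app_b)))
```

Here both application images have three layers, two of which (the base image's) are shared, so only the small application-specific layers differ.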

When a container starts, the container engine creates a new container instance from the specified image and gives it its own isolated namespaces for resources such as process IDs, networking, and mounted file systems. The container runs as an isolated process on the host operating system and shares the host's kernel, but it has its own file system and environment variables.
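The idea of a private file system on top of a shared image can be illustrated with an analogy from Python's standard library. `collections.ChainMap` looks keys up through a series of mappings but writes only to the first one, which loosely mirrors how union file systems such as OverlayFS give each container its own writable layer over read-only image layers (copy-on-write). This is a sketch under that analogy only: the paths and the `start_container` helper are hypothetical, and namespaces, deletions, and real file I/O are not modeled.

```python
from collections import ChainMap

# Hypothetical read-only image layers (path -> contents), listed topmost first.
app_layer = {"/app/main.py": "print('hello')"}
runtime_layer = {"/usr/bin/python3": "<interpreter>", "/etc/os-release": "base OS"}
IMAGE_LAYERS = [app_layer, runtime_layer]


def start_container(env: dict) -> dict:
    """Create a container view: a private writable layer plus its own environment."""
    writable_layer = {}
    # Reads fall through to the image layers; writes land only in writable_layer.
    filesystem = ChainMap(writable_layer, *IMAGE_LAYERS)
    return {"fs": filesystem, "env": dict(env)}


c1 = start_container({"APP_ENV": "test"})
c2 = start_container({"APP_ENV": "prod"})

# c1 changes a file that came from the image: the new version is stored only in c1's writable layer.
c1["fs"]["/etc/os-release"] = "patched by c1"

if __name__ == "__main__":
    print(c1["fs"]["/etc/os-release"])       # c1 sees its own version
    print(c2["fs"]["/etc/os-release"])       # c2 still sees the image's version
    print(runtime_layer["/etc/os-release"])  # the shared image layer is unchanged
```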

Because containers share the host's kernel and resources, they are lightweight and efficient compared to virtual machines. As a result, a single host can run many more containers than virtual machines.
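A rough back-of-the-envelope calculation shows where the density advantage comes from: every virtual machine carries a full guest operating system, while containers share the host's. All of the numbers below are hypothetical assumptions chosen only to illustrate the arithmetic. Real capacity also depends on CPU, I/O, hypervisor overhead, and the workloads themselves.

```python
# Hypothetical sizing figures, in MiB, chosen only to illustrate the arithmetic.
HOST_MEMORY = 65_536        # a 64 GiB host
HOST_OS = 2_048             # memory reserved for the host operating system
APP = 512                   # memory the application itself needs
GUEST_OS = 2_048            # assumed overhead of a full guest OS inside each VM
CONTAINER_OVERHEAD = 50     # assumed per-container runtime overhead


def max_instances(per_instance: int) -> int:
    """How many instances fit in the memory left after the host OS is reserved."""
    return (HOST_MEMORY - HOST_OS) // per_instance


vm_count = max_instances(APP + GUEST_OS)                   # each VM repeats the OS
container_count = max_instances(APP + CONTAINER_OVERHEAD)  # containers share the host kernel

if __name__ == "__main__":
    print(f"VMs: {vm_count}, containers: {container_count}")
```

Under these assumptions the host fits 24 virtual machines but 112 containers, because the guest OS overhead is paid once per VM rather than once per host.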

Container orchestration tools such as Kubernetes, Docker Swarm, and Mesos also help manage large-scale deployments, handling scaling, load balancing, and self-healing of containers.
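As an example of how an orchestrator decides when to scale, the Kubernetes Horizontal Pod Autoscaler documentation describes its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change made when the ratio is within a tolerance (0.1 by default). The Python function below is a simplified sketch of that rule, clamped to bounds similar to an HPA's `minReplicas` and `maxReplicas`. It leaves out details the real controller handles, such as unready pods, missing metrics, and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA-style scaling rule (a sketch, not the Kubernetes controller)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: leave replicas unchanged
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 3 replicas averaging 90% CPU against a 60% target: scale out.
    print(desired_replicas(3, 90, 60))
    # 4 replicas averaging 20% CPU against a 60% target: scale in.
    print(desired_replicas(4, 20, 60))
```

With these inputs the function returns 5 for the first call (ceil(3 × 1.5)) and 2 for the second (ceil(4 × 1/3)).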

List Of Layers Of Containerization

Containerization technology is made up of several layers, each with a specific set of functions and capabilities, that work together to enable containerized transformation. Let's take a closer look at each layer.

- Hardware Infrastructure: Everything begins with physical compute resources, whether in an on-premises data center or in the cloud. Container orchestration platforms run on this infrastructure and automate the deployment, scaling, and management of containers across it. Typically, you create nodes, which are the physical or virtual machines on which the containers run.

- Host Operating System: The next layer is the host operating system which provides the foundation for containerization technology. The host operating system is responsible for managing the hardware resources, such as memory and CPU, and providing the necessary drivers and system calls to run the containerized applications.

- Container Engine: This layer is where a container engine such as Docker comes in. The container engine is responsible for creating, managing, and running containers: it provides the tools and interfaces for working with containers and allows resources to be shared between the host and the containers.

- Application Container: The top layer is the application container, which is the actual executable package that contains the application and its dependencies. The application container is a lightweight, stand-alone executable package that includes everything the application needs to run, including the code, runtime, system tools, libraries, and settings.

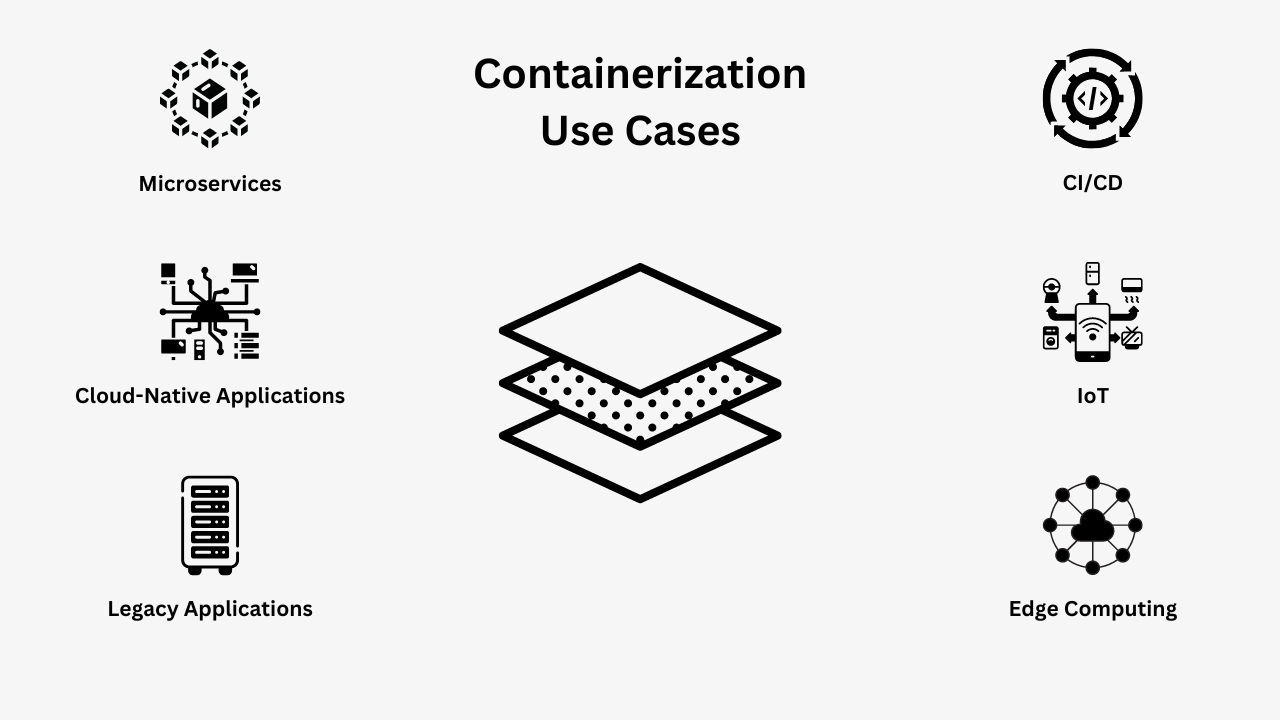

Specific Containerization Use Cases

Containerization offers several benefits, such as portability, simple deployment, speed, scalability, and efficiency, but understanding its real-world use cases is what lets you put that knowledge into practice. Let's look at some popular containerization use cases:

- Microservices: Containerization is commonly used to deploy microservices-based architectures, where a single application is broken down into smaller, independently deployable services. This allows for more efficient scaling and management of the application and reduces the risk of issues in one service affecting the entire application.

- Cloud-Native Applications: Containerization is also commonly used in cloud-native applications, which are designed to take advantage of the scalability and flexibility of cloud environments. Containerization allows for easy deployment and scaling of the application in a cloud environment and helps to ensure consistency and portability of the application across different environments.

- Legacy Applications: Containerization can also be used to modernize legacy applications, which may be difficult to deploy and manage in a traditional environment. Containerization allows for the isolation and packaging of the legacy application, which makes it easier to deploy and manage the application in a modern environment.

- CI/CD: Containerization allows for efficient implementation of continuous integration and continuous delivery (CI/CD) pipelines, where changes to the codebase are automatically built, tested, and deployed to production.

- IoT: Containerization can also be used in IoT (Internet of Things) applications to manage the deployment and scaling of applications on devices with limited resources.

- Edge Computing: Containerization can be used in edge computing, where computation is done close to the source of data to reduce latency and improve performance.

In short, containerization provides a consistent and predictable environment for the application to run in and simplifies the deployment, scaling, and management of the application.

How Do Cloud Platforms Support Application Containerization?

Cloud platforms offer a range of services, products, and features designed specifically to support application containerization, such as:

- Container Orchestration: Cloud platforms such as AWS offer managed container services, including Amazon EKS and Amazon ECS for orchestration and AWS Fargate for running containers without managing servers, that let developers build, deploy, scale, and manage containerized applications faster and more efficiently. Because they are managed services, they automate crucial tasks such as load balancing, auto-scaling, and self-healing, which helps ensure the availability and performance of the application.

- Container Registry: Amazon Elastic Container Registry (ECR) and Google Container Registry (GCR) are container registry services that make it easy to store, manage, and distribute container images. Because they are provided by cloud platforms, they offer features such as image scanning and vulnerability management that help keep images secure.

- Serverless Computing: Cloud platforms also provide serverless computing solutions, including AWS Lambda, Google Cloud Functions, and Azure Functions. Some of these, such as AWS Lambda and Azure Functions, can run code packaged as container images, so developers can run containerized workloads without provisioning and managing servers. For event-driven or intermittent workloads, paying only for actual execution time can be more cost-effective than keeping servers running.

- Networking and Security: Beyond storage and compute, cloud platforms also cover networking and network security. Developers can use services such as load balancers, firewall rules, and encryption that automate several processes while helping ensure the application's availability, performance, and security.

- Monitoring and Logging: As containerization supports and promotes distributed environments, cloud platforms also offer several monitoring and logging solutions to check the performance and health of containerized applications. You can also look into the real-time performance of the application which assists in identifying and troubleshooting issues.
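Managed orchestration services typically implement self-healing and scaling as a control loop: they repeatedly compare the desired state (for example, "three healthy replicas") with what is actually running and act on the difference. The following Python sketch shows one pass of such a reconciliation loop against a hypothetical in-memory `Cluster` class. It is not the API of EKS, ECS, or any other service.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Hypothetical in-memory cluster: container ID -> passing health checks?"""
    containers: dict = field(default_factory=dict)
    _ids: itertools.count = field(default_factory=itertools.count)

    def start(self, image: str) -> str:
        container_id = f"{image}-{next(self._ids)}"
        self.containers[container_id] = True
        return container_id

    def stop(self, container_id: str) -> None:
        del self.containers[container_id]


def reconcile(cluster: Cluster, image: str, desired: int) -> None:
    """One pass of a control loop that drives actual state toward desired state."""
    # Self-healing: remove containers that are failing their health checks.
    for container_id, healthy in list(cluster.containers.items()):
        if not healthy:
            cluster.stop(container_id)
    # Scaling: start replacements or extra replicas until the count matches...
    while len(cluster.containers) < desired:
        cluster.start(image)
    # ...or stop surplus replicas if there are too many.
    for container_id in list(cluster.containers)[desired:]:
        cluster.stop(container_id)


if __name__ == "__main__":
    cluster = Cluster()
    reconcile(cluster, "web", desired=3)
    cluster.containers["web-1"] = False  # simulate a failed health check
    reconcile(cluster, "web", desired=3)
    print(sorted(cluster.containers))
```

Running the loop repeatedly, rather than reacting to individual events, means the system converges to the desired state even if a previous action was missed.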

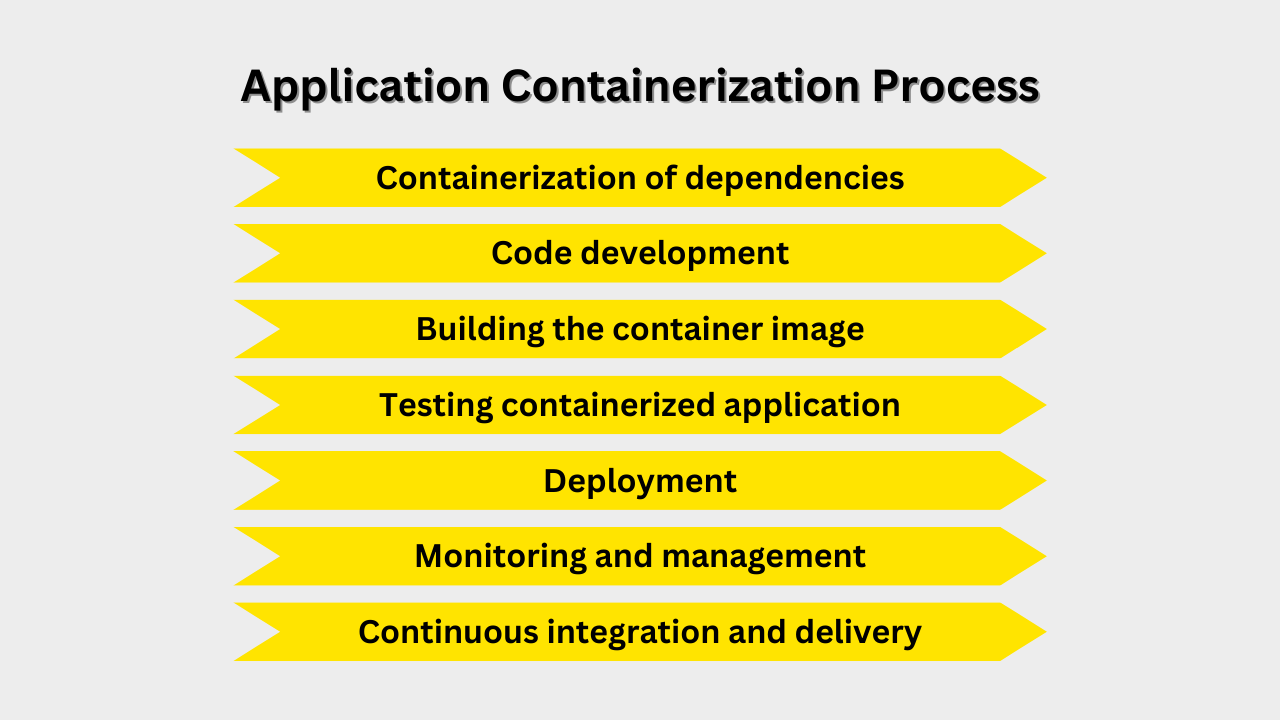

What Does An Application Development Process Look Like With Containerization?

The app development process with containerization typically includes the following steps:

- Containerization of dependencies: The first step is to containerize the application's dependencies, such as the runtime, system tools, libraries, and settings. This is typically done by defining a container image (for example, with a Dockerfile) that starts from a base image and adds these dependencies.

- Code development: The application code is developed and tested locally.

- Building the container image: The next step is to build the container image of the application. The image contains the application code and the containerized dependencies. This image can be stored in a container registry for future use.

- Testing: The containerized application is tested locally using the same image that will be used in production.

- Deployment: The containerized application is deployed to a container orchestration platform like Kubernetes, Docker Swarm, or Mesos. These platforms help in scaling, load balancing, and self-healing of the containers.

- Monitoring and management: The deployed containerized application is monitored for its performance and any issues, and management tasks like scaling, updates, and rollbacks can be easily done using the container orchestration platform.

- Continuous integration and delivery: The containerization process makes it easy to implement a continuous integration and delivery pipeline, where changes in the codebase can be automatically built, tested, and deployed to production.
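A CI/CD pipeline for a containerized application is essentially an ordered list of stages that stops at the first failure, so a broken build never reaches production. The Python sketch below models that fail-fast behavior. The stage functions, the `registry.example.com/my-app` image name, and tagging the image with the commit hash are hypothetical choices for illustration; in practice each stage would call real build, test, and deployment tools.

```python
from typing import Callable, List, Optional, Tuple

Stage = Tuple[str, Callable[[dict], bool]]


def build(ctx: dict) -> bool:
    # Tag the image with the commit hash so each build is traceable (hypothetical name).
    ctx["image"] = f"registry.example.com/my-app:{ctx['commit'][:7]}"
    return True


def run_tests(ctx: dict) -> bool:
    return ctx["tests_pass"]  # stands in for running the test suite inside the image


def push(ctx: dict) -> bool:
    ctx.setdefault("registry", []).append(ctx["image"])  # simulated registry push
    return True


def deploy(ctx: dict) -> bool:
    ctx["deployed"] = ctx["image"]  # simulated rollout of the tested image
    return True


PIPELINE: List[Stage] = [("build", build), ("test", run_tests), ("push", push), ("deploy", deploy)]


def run_pipeline(stages: List[Stage], ctx: dict) -> Tuple[List[str], Optional[str]]:
    """Run stages in order; return (completed stages, name of the failed stage or None)."""
    completed: List[str] = []
    for name, stage in stages:
        if not stage(ctx):
            return completed, name
        completed.append(name)
    return completed, None


if __name__ == "__main__":
    print(run_pipeline(PIPELINE, {"commit": "3f2a9c1d", "tests_pass": True}))
    print(run_pipeline(PIPELINE, {"commit": "b71e04aa", "tests_pass": False}))
```

Because the image built in the first stage is the one that is tested, pushed, and deployed, production runs exactly the artifact that passed the tests.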

How Does Containerization Streamline Application Development?

Containerization makes the app development process more efficient and streamlined by providing a consistent and predictable environment for the application to run in and by simplifying the deployment, scaling, and management of the application.

Let's look at an example of how containerization streamlines the app development process:

Imagine a company is developing a new web application that uses a specific version of a programming language and several libraries. Without containerization, the company would need to ensure that the exact same versions of the programming language and libraries are installed on every developer’s machine, the testing environment, and the production environment. This can be difficult to manage and can lead to compatibility issues.

With containerization, the company can create a container image that includes the specific version of the programming language and all the required libraries. This image can be shared among all the developers and used in the testing and production environments. This ensures that the application runs consistently across all environments, eliminating version mismatches between them.
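The difference can be expressed as a simple consistency check. Without containers, every environment's installed versions must be compared package by package; with containers, each environment only needs to run the same image, which can be identified by a single digest. In the Python sketch below, the environment names, package names, versions, and digest strings are all hypothetical.

```python
def version_drift(environments: dict) -> dict:
    """Return {package: {env: version}} for packages whose versions differ across environments."""
    packages = set().union(*(env.keys() for env in environments.values()))
    drift = {}
    for package in sorted(packages):
        versions = {name: env.get(package) for name, env in environments.items()}
        if len(set(versions.values())) > 1:
            drift[package] = versions
    return drift


def same_image(deployed_digests: dict) -> bool:
    """With containers, consistency reduces to: every environment runs the same image digest."""
    return len(set(deployed_digests.values())) == 1


# Hypothetical environments managed by hand, without containers.
manual = {
    "developer-laptop": {"python": "3.11.4", "web-framework": "2.3.0"},
    "testing": {"python": "3.11.4", "web-framework": "2.3.0"},
    "production": {"python": "3.10.12", "web-framework": "2.2.5"},
}

# Hypothetical containerized setup: every environment runs one image.
containerized = {
    "developer-laptop": "sha256:aaaa",
    "testing": "sha256:aaaa",
    "production": "sha256:aaaa",
}

if __name__ == "__main__":
    for package, versions in version_drift(manual).items():
        print(package, versions)
    print("containerized environments consistent:", same_image(containerized))
```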

In this way, containerization lets the company focus on the development and functionality of the application rather than on environment-specific issues, which results in a more streamlined app development process.

Let's look at another scenario:

Developing a containerized application does not require renting a full virtual machine. Containers use operating-system-level virtualization: they share the host operating system's kernel, and each container holds only the application and the software it needs to run (settings, libraries, storage, and so on).

Containerization allows numerous applications to run on a single host operating system without each needing its own virtual machine, which can save a lot of money. Previously, a server hosting five applications needed five virtual machines, each running its own copy of the operating system; with containers, those five applications, or even ten, can share a single copy of the operating system.

Besides improving portability, application containerization can significantly reduce costs. Because containers do not have to wait for an operating system to boot, they also speed up testing applications across different operating system environments (for example, different Linux distributions). Furthermore, if the application crashes during testing, only its isolated container stops, not the entire operating system.

Another advantage of application containerization is that containers can be clustered together for easy scaling or can work together as microservices. In the microservices case, if one application needs to be updated or replaced, this can be done independently of the other applications and without shutting down the entire service.

What Is Container Security And Why Is It Required?

Containers are replaced frequently, which simplifies vulnerability remediation: regularly ripping and replacing containers is an effective way both to deliver new functionality and to apply patches. However, this process generates a large number of containers, and the frequency of their updates makes container security more complicated, because every update can introduce new vulnerabilities. Container security practices help protect against the vulnerabilities and threats that arise from the isolation, scale, multi-tenancy, and shared resources of containerized applications.

- Image Security: Most images, even custom-made ones, are built on third-party code and are thus at risk from third-party vulnerabilities. Therefore, it is important to ensure that container images are free from known vulnerabilities and malicious code.

- Avoid Developing Mega-Containers: Mega-containers can become large and unwieldy, making them difficult to manage and scale. Besides that, the larger a container becomes, the more potential vulnerabilities it may contain. Therefore, avoid building mega-containers.

- Integrate with CI/CD Pipeline and Secure Your Host Environment: One way to shift security left is to address vulnerabilities before deployment. A common best practice is to integrate container security scanning tools with CI/CD platforms so that known vulnerabilities and misconfigurations in images are caught before deployment. Because all containers on a host share its kernel, the host operating system should also be kept patched and hardened.
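Integrating scanning into CI/CD usually means turning scanner output into a pass/fail decision, for example by failing the pipeline when any finding is rated HIGH or CRITICAL. Scanners such as Trivy can do this directly through their `--severity` and `--exit-code` options. The Python sketch below shows the same gating logic on a hypothetical, simplified findings list; the finding IDs and package names are placeholders, not real advisories.

```python
from enum import IntEnum


class Severity(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


def blocking_findings(findings: list, fail_on: Severity = Severity.HIGH) -> list:
    """Return findings at or above the severity that should block deployment."""
    return [f for f in findings if Severity[f["severity"]] >= fail_on]


# Hypothetical scanner output for an image, simplified to three fields.
FINDINGS = [
    {"id": "EXAMPLE-0001", "package": "libexample", "severity": "LOW"},
    {"id": "EXAMPLE-0002", "package": "libsample", "severity": "CRITICAL"},
    {"id": "EXAMPLE-0003", "package": "libdemo", "severity": "MEDIUM"},
]

if __name__ == "__main__":
    blockers = blocking_findings(FINDINGS)
    for finding in blockers:
        print(f"blocking: {finding['id']} in {finding['package']} ({finding['severity']})")
    # A CI step would exit with this code; non-zero marks the step as failed.
    exit_code = 1 if blockers else 0
    print("exit code:", exit_code)
```

Making the threshold a parameter lets a team start by blocking only CRITICAL findings and tighten the gate over time.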

Conclusion

Application containerization, often delivered through Container-as-a-Service (CaaS) offerings, is a powerful method for deploying applications and services efficiently and consistently. Packaging all the dependencies, libraries, and configurations an application needs into a container ensures that it runs the same way regardless of the environment it is deployed in. This makes applications easier to develop, test, deploy, scale, and manage in production. Additionally, containers use fewer resources than traditional virtual machines, making them more cost-effective and efficient.

CaaS also provides a wide range of benefits when used in conjunction with cloud computing, such as automation, cost-effectiveness, high availability, security, and integration. Cloud providers offer a comprehensive set of services and features that are specifically designed to support containerized applications, making it easy to deploy, scale, and manage containerized applications in a cloud environment.

Overall, container-as-a-service is a powerful technology that can be used in a wide range of use cases, including microservices, cloud-native applications, legacy applications, CI/CD, IoT, and edge computing, to provide a consistent and predictable environment for the application to run in and to simplify the deployment, scaling, and management of the application.