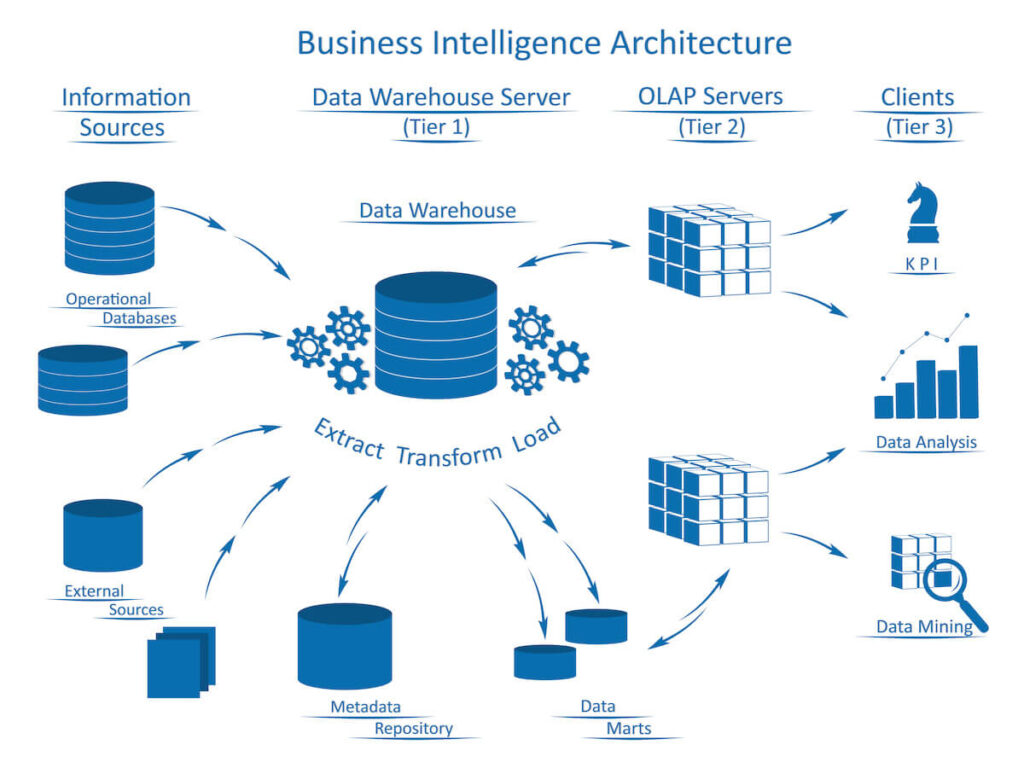

ETL (Extract, Transform, Load) is an automated process for moving data between systems: it extracts data from one or more source databases, transforms it, and loads it into a destination database. Organizations run ETL processes regularly to maintain quality control, since these processes help ensure that data is clean, accurate, and complete before other systems use it. Without an ETL process in place, data is not consistently cleaned or transformed, so downstream systems are more likely to work with inaccurate or incomplete data.

The importance of ETL in an organization is in direct proportion to how much the organization relies on data for analytics and decision-making. As the amount of data has grown in the past couple of years, data warehouses have grown with it, and ETL has proliferated and become more sophisticated. New roles and teams have emerged within organizations that depend on data insights for their day-to-day work, so developing or rewriting ETL processes is crucial to meeting business objectives. Another force driving ETL modernization for digital transformation is the emergence of cloud-based data storage and data operations.

Data storage and transformation used to be done primarily in on-premises data warehouses. The cloud has fundamentally changed how data is stored and processed. Companies that collect and use data for decision-making have seen a continued upsurge in the amount of data. At the same time, increasingly sophisticated tools have emerged that use this data to provide real-time insights into the business and its customers.

Traditional data warehouse infrastructure struggles to hold and process that much data, at least in a cost-effective and timely manner. For high-speed, sophisticated analytics and intelligence across all of our data, the cloud is usually the most practical place to do it. Cloud data warehouses like Amazon Redshift, Snowflake, and Google BigQuery can scale up and down elastically to accommodate almost any amount of data. They also support massively parallel processing (MPP), which distributes huge workloads across horizontally scalable clusters of computational resources. On-premises infrastructure rarely matches that speed or scalability. The cloud changes how we handle data and how we define and deliver ETL.

Significance of ETL Process In A Business

As data integration becomes more agile, custom options for ETL are gaining acceptance. One example is a streaming pipeline organized around business entities rather than database tables. First, a logical abstraction layer captures all the characteristics of a business entity from all the data sources. The data is then collected, refined, and stored in a final data asset.

In the extraction phase, the requested entity’s data is retrieved from all sources. In the transformation phase, the data set is filtered, anonymized, and transformed according to predetermined rules, for example, digital entities. Finally, in the load phase, the resulting data sets are delivered to the big data store.
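The three phases above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the `CRM` and `BILLING` dictionaries, the field names, and the two transformation rules are all hypothetical stand-ins for real source systems and business rules. Note that hashing an email is strictly pseudonymization, used here only as a simple example of an anonymization rule.

```python
import hashlib

# Hypothetical source systems, each holding part of one "customer" entity.
CRM = {"c1": {"name": "Ada Lovelace", "email": "ada@example.com"}}
BILLING = {"c1": {"plan": "pro", "balance_cents": 1250}}


def extract(entity_id):
    """Collect every fragment of one business entity from all sources."""
    record = {"id": entity_id}
    for source in (CRM, BILLING):
        record.update(source.get(entity_id, {}))
    return record


def transform(record):
    """Filter and anonymize the entity according to fixed rules."""
    out = dict(record)
    out.pop("name", None)  # rule 1 (assumed): drop fields the target does not need
    if "email" in out:
        # rule 2 (assumed): replace the email with a one-way SHA-256 hash
        out["email_hash"] = hashlib.sha256(out.pop("email").encode()).hexdigest()
    return out


def load(record, store):
    """Write the unified entity into the target store, keyed by entity id."""
    store[record["id"]] = record


target_store = {}
load(transform(extract("c1")), target_store)
```

Because each function handles one entity at a time, the same three calls could run per change event in a streaming setup rather than once per batch.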

Such an approach processes thousands of business entities in a given time frame and delivers enterprise-grade throughput and response times. Unlike batch processing, it continuously captures data changes in real time from multiple source systems and streams them through the business entity layer to the target data store. Ultimately, data collection, processing, and pipelining based on business entities produce fresh and unified data assets.

Steps To Build An ETL Process

Although ETL stands for Extract, Transform, and Load, in practice these stages break down into several ordered steps that convert raw data into insights. Let’s go through them to understand how ETL processes work:

1. Copying The Raw Data

Like other software development projects, ETL development begins with studying the details of the system and choosing design patterns. Batch processing is a widely accepted method because of its speed and because it provides useful information when an issue occurs. Even so, it is worth copying the raw data before the transformation stage, for several reasons:

- The developer has no control over the source system between executions, so the source data may change from one run to the next. Copying the raw data preserves what was actually extracted.

- The source system is usually different from the system the developer works on. Taking unnecessary steps while extracting records can adversely affect the source system, which in turn impacts its end users.

- Having raw data at your disposal will help expedite the process of finding and solving problems. As the data moves, debugging becomes quite difficult.

- Local raw data is an excellent mechanism for auditing and testing throughout the ETL process.
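As a rough sketch of this step, the snippet below dumps one source table, unchanged, into a local staging file. It assumes the source is reachable through a Python DB-API connection (an in-memory SQLite database stands in for it here); the function name `stage_raw_copy`, the `orders` table, and the CSV staging format are illustrative choices, not requirements.

```python
import csv
import sqlite3
import tempfile
from pathlib import Path


def stage_raw_copy(source_conn, table, staging_dir):
    """Dump one source table, untouched, to a CSV file in the staging area."""
    staging_dir = Path(staging_dir)
    staging_dir.mkdir(parents=True, exist_ok=True)
    out_path = staging_dir / f"{table}.csv"

    # One plain SELECT keeps the work done on the source system small.
    # `table` is interpolated directly, so it must come from trusted config.
    cursor = source_conn.execute(f"SELECT * FROM {table}")
    header = [col[0] for col in cursor.description]

    with out_path.open("w", newline="", encoding="utf-8") as fh:
        writer = csv.writer(fh)
        writer.writerow(header)
        writer.writerows(cursor)
    return out_path


# Demo: an in-memory SQLite database stands in for the real source system.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
source.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 9.5), (2, 20.0)])

with tempfile.TemporaryDirectory() as staging:
    raw_file = stage_raw_copy(source, "orders", staging)
    with raw_file.open(newline="", encoding="utf-8") as fh:
        staged_rows = list(csv.reader(fh))
```

Later steps can then read from the staged file as often as needed for debugging, auditing, and testing without touching the source system again.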

2. Filter The Data

The next step is to filter and fix the bad data. Inaccurate records are the main problem at this stage, and you need to correct them or set them aside. We advise you to mark bad data in the source documents with a “Bad Record” or “Bad Reason” field so that incorrect records can be conveniently classified, evaluated, and excluded from future processing. Filtering also lets you narrow the result set, for example to the last month of data, or ignore columns that contain nulls.
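Here is one way this could look in Python. The validation rules (a missing `customer_id`, a negative `amount`), the field names, and the 30-day window are assumptions made for the example; real rules depend on your data.

```python
from datetime import date, timedelta


def flag_bad_record(row):
    """Return a copy of the row with 'bad_record' and 'bad_reason' fields."""
    reasons = []
    if row.get("customer_id") is None:
        reasons.append("missing customer_id")
    amount = row.get("amount")
    if amount is None or amount < 0:
        reasons.append("invalid amount")
    return {**row, "bad_record": bool(reasons), "bad_reason": "; ".join(reasons)}


def filter_rows(rows, today, days=30):
    """Split rows into (good, bad); good rows are also limited to the last `days` days."""
    cutoff = today - timedelta(days=days)
    flagged = [flag_bad_record(r) for r in rows]
    bad = [r for r in flagged if r["bad_record"]]
    good = [r for r in flagged if not r["bad_record"] and r["order_date"] >= cutoff]
    return good, bad


rows = [
    {"customer_id": 1, "amount": 10.0, "order_date": date(2024, 5, 20)},
    {"customer_id": None, "amount": 5.0, "order_date": date(2024, 5, 21)},
    {"customer_id": 2, "amount": -3.0, "order_date": date(2024, 5, 22)},
    {"customer_id": 3, "amount": 7.0, "order_date": date(2024, 1, 2)},  # too old
]
good, bad = filter_rows(rows, today=date(2024, 6, 1))
```

Bad rows are kept, with their reason attached, rather than silently dropped, so they can be reviewed and fixed later.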


3. Transform The Data

This step is the most difficult in the ETL development process. The purpose of transformation is to translate the data into the form the warehouse expects. We recommend you make the changes in steps: first, add keys to the data, then add calculated columns, and finally combine rows to form aggregates. With aggregates, you can summarize the information in many ways: sum, average, find the desired value, and group by column.

Working in steps gives you a logical summary of each step and a natural place to add “what and why” comments. How many transformations you combine in one step can vary depending on the number of steps, processing time, and other factors. The main thing is not to let any single step become too complicated.
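The stepwise approach might be sketched as three small functions, each with a short “what and why” note. The column names (`region`, `quantity`, `unit_price`) and the sample rows are hypothetical.

```python
from collections import defaultdict


def add_keys(rows):
    """Step 1. What: attach a surrogate key. Why: stable row identity in the warehouse."""
    return [{**row, "row_key": i} for i, row in enumerate(rows, start=1)]


def add_calculated_columns(rows):
    """Step 2. What: derive line_total. Why: reports are built on revenue per line."""
    return [{**row, "line_total": row["quantity"] * row["unit_price"]} for row in rows]


def aggregate_by(rows, column):
    """Step 3. What: group by one column. Why: summaries need sum, average, and count."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[column]].append(row["line_total"])
    return {
        key: {"sum": sum(vals), "avg": sum(vals) / len(vals), "count": len(vals)}
        for key, vals in groups.items()
    }


sales = [
    {"region": "EU", "quantity": 2, "unit_price": 5.0},
    {"region": "EU", "quantity": 1, "unit_price": 20.0},
    {"region": "US", "quantity": 3, "unit_price": 10.0},
]
keyed = add_keys(sales)
enriched = add_calculated_columns(keyed)
summary = aggregate_by(enriched, "region")
```

Keeping each step in its own function means the intermediate results (`keyed`, `enriched`) can be inspected separately when something looks wrong.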

4. Loading Data Into A Warehouse

After the transformation, your data should be ready to be loaded into the data warehouse. Before loading, you have to decide on the frequency, for example whether the information will be loaded once a week or once a month. This affects the work of the data warehouse, because the server slows down during the loading process and the data may change in the meantime. You can deal with changes to existing records by updating them with the latest information from the data sources or by keeping an audit trail of changes.
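Both ways of handling changed records can be combined, as in the sketch below. It uses an in-memory SQLite database as a stand-in for the warehouse; the `customers` and `customers_audit` tables and the `load_customers` function are hypothetical names chosen for the example.

```python
import sqlite3


def load_customers(conn, rows):
    """Insert new rows; for changed rows, record the old value, then update."""
    with conn:  # one transaction per batch: commit on success, roll back on error
        for customer_id, email in rows:
            existing = conn.execute(
                "SELECT email FROM customers WHERE id = ?", (customer_id,)
            ).fetchone()
            if existing is None:
                conn.execute(
                    "INSERT INTO customers (id, email) VALUES (?, ?)",
                    (customer_id, email),
                )
            elif existing[0] != email:
                # Keep an audit trail before overwriting the current value.
                conn.execute(
                    "INSERT INTO customers_audit (customer_id, old_email, new_email) "
                    "VALUES (?, ?, ?)",
                    (customer_id, existing[0], email),
                )
                conn.execute(
                    "UPDATE customers SET email = ? WHERE id = ?",
                    (email, customer_id),
                )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")
conn.execute(
    "CREATE TABLE customers_audit (customer_id INTEGER, old_email TEXT, new_email TEXT)"
)
load_customers(conn, [(1, "a@example.com"), (2, "b@example.com")])      # first load
load_customers(conn, [(1, "a@new.example.com"), (2, "b@example.com")])  # later load
```

Row-by-row lookups are easy to follow but slow for large batches; a real warehouse load would more likely use the bulk or merge facilities of the target system.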

How We Helped Build ETL Process For A Global Media & Advertising Company

We built an ETL process while working on a project for one of our media and advertising clients. They wanted to use company-owned data for better decision-making, process improvement, and operational excellence, and they wanted a unified system built on modern data technologies to manage, measure, control, analyze, and leverage logs.

Since we dealt with 200+ data sources, we needed to collect the information in one centralized place to develop an ETL process. To meet the client’s expectations and business objectives, our development team analyzed each source’s data format and wrote a program to pull the data into Azure Blob Storage, where it was transformed into a standard format. When working with this volume of information, our ETL developers faced challenges such as storing extensive batched data, automating data pipeline deployment, and rewriting and deploying business logic while meeting the unique operational requirements of value-generating systems.

As an Azure consulting partner, we leveraged our Azure cloud expertise to build and deploy the data pipeline. With the help of Azure Blob Storage, Azure Data Factory, and Azure Data Lake Storage, we created cloud-based ETL and data integration services that allowed the client to consolidate data from 200+ sources. We orchestrated data pipelines, transformation, and preparation using Airflow and Trifacta. Our team used React.js for UI development and Microsoft Power BI to give the client interactive paginated reports and data visualization. Successive helped the client streamline their entire media workflow, from client onboarding to visualizing campaign data for better decision-making and operational excellence. As a result, the completed ETL process increased productivity in working with information and created a consolidated database on which to build complete data visualization and analytics processes. Read the complete story and the step-by-step approach taken for building the ETL Process For A Global Media & Advertising Company.